My personal favorite AI system right now is ChatGPT. I use it often enough that I even signed up for a paid account. Mostly my “chats” are about organizing my thoughts, advice on interpersonal matters, and silly image creation. However, more and more I’ve been using it as another tool in my tool chest for managing and advocating for my health.

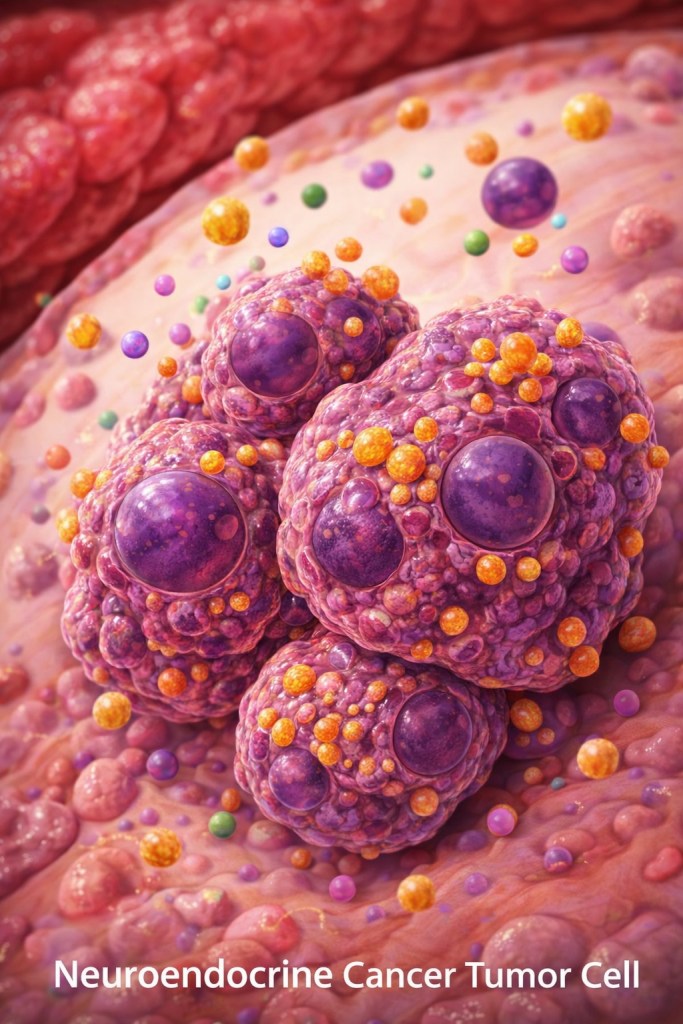

Although I already knew chats would be higher quality if they were prefaced in a specific way, for example “I want you to be an expert in teen brain and emotional development. My teen daughter said (blank) and I need advice on how to respond in a supportive way”, it didn’t occur to me to do the same thing with health questions. A friend of mine whom I know through the neuroendocrine cancer (aka Neuroendocrine Tumors aka NETs) community is a big user of ChatGPT, and he let me know that a feature of paid accounts is that you can create a custom-made GPT to focus on a specific topic. He created one called “NET Sherpa” that has tons of NET resources and expertise built into it. Here is the link if you want to try it out.

Once I learned ChatGPT could do that kind of thing, I started playing around with it a lot more. For example, here are a few real health-related prompt examples I’ve fed it in the last few months:

- Where is the thinnest skin on the body?

- Why does my colon keep cramping, even when it’s empty?

- If you’re having a big abdominal surgery, what happens if you get a perforated bowel?

- What does “TOTAL CO2, PLASMA (LAB)” mean?

Of course, I still do my due diligence and compare the answers against other, reputable sources. Personally, I have found that ChatGPT gets things right about 85-90% of the time. It’s really mind-blowing, actually! We’re living in the future! The rather frightening part, though, is that ChatGPT acts incredibly confident that it is right 100% of the time. I’m worried that most people won’t be able to tell when ChatGPT is correct and when it isn’t unless they are already an expert on the subject. Famous astrophysicist Neil deGrasse Tyson recently made some really interesting comments about this.

This week I decided to feed ALL my medical records into ChatGPT just to see what would happen (it took quite a while, with a ton of downloading from MyChart and then uploading into the chat). I was reassured to see that it came up with the same diagnosis and care plan that I already have in place. What was new, however, is that after I uploaded all my medical data, I asked ChatGPT “What are some questions I should ask at my upcoming oncologist appointment?” It provided several really, really good suggestions that I hadn’t thought of yet, and that I’m definitely going to use.

I consider myself a fairly educated NET patient at this point, but I’m always looking for more ways to have informed, educated conversations with my oncologist about my health and care plan. I’m glad to have ChatGPT as an additional resource.

Please understand that ChatGPT is only one tool in my health toolbox. I have an amazing team of oncologists and doctors that I trust. I have read books and journal articles, listened to podcasts, watched interviews, and done a LOT of my own research. I participate in support groups. I have a relative and a best friend who are real doctors and who generously give me lots of solid advice. So if you’re reading this, please don’t think I’m recommending ChatGPT as a main source of information and/or advice, because I’m not. Also, please keep in mind that ChatGPT is very, very wrong sometimes (it “confidently hallucinates”). Use all AI systems with a grain of salt!
